We have n classes with linear scores A1, A2, …, An, and we want to calculate the probability of each class. The probability of class $i$ is $P(i) = \frac{e^{A_i}}{e^{A_1} + \dots + e^{A_n}}$. This is the softmax activation function, where $i$ indexes the class.

To understand cross-entropy, it was essential to first discuss loss functions in general and activation functions, i.e., converting discrete predictions to continuous ones. We’ll now dive deep into the cross-entropy function.

Cross-entropy

Claude Shannon introduced the concept of information entropy in his 1948 paper, “A Mathematical Theory of Communication.” According to Shannon, the entropy of a random variable is the average level of “information,” “surprise,” or “uncertainty” inherent in the variable’s possible outcomes. A random variable’s entropy is therefore related to the error functions from our introduction: the average level of uncertainty corresponds to the error.

Cross-entropy builds upon the idea of information-theoretic entropy and measures the difference between two probability distributions for a given random variable or set of events.

Cross-entropy can be applied in both binary and multi-class classification problems. We’ll discuss the differences when using cross-entropy in each scenario.

Let’s consider the earlier example, where we answer whether a student will pass the SAT exams. In this case, we work with four students. We have two models, A and B, that predict the likelihood of the four students passing the exam, as shown in the figure below.

Earlier, we discussed that “In deep learning, the model applies a linear regression to each input, i.e., the linear combination of the input features.” Each model applies the linear function (f(x) = wx + b) to each student to generate the linear scores, then uses the sigmoid function to transform the linear scores to probabilities.

Let’s assume that the two models give the probabilities shown in the diagrams, where the blue region represents pass and the red region represents fail.
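As a rough illustration of the softmax function described in this section, here is a minimal Python sketch. The function name `softmax` and the example scores are assumptions made for illustration; they do not come from the article.

```python
import math

def softmax(scores):
    """Turn linear scores A1..An into probabilities that sum to 1."""
    # Subtracting the largest score keeps exp() from overflowing
    # and does not change the resulting probabilities.
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical linear scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
print(probs)
```

The class with the largest linear score receives the largest probability, and the outputs always add up to 1.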

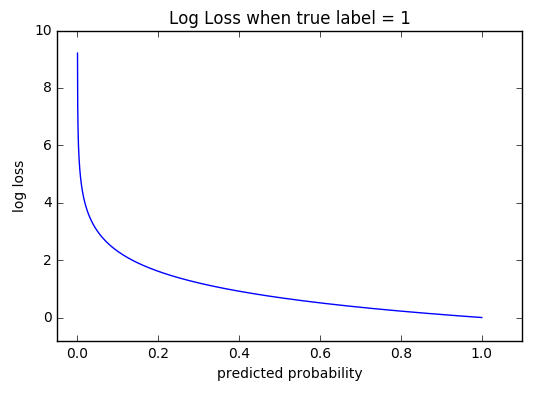
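The comparison of two models on four students can be sketched in Python as follows. The labels and linear scores are invented for illustration and are not the values from the article's figures; `sigmoid` and `binary_cross_entropy` are hypothetical helper names.

```python
import math

def sigmoid(score):
    """Map a linear score to a pass probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-score))

def binary_cross_entropy(labels, probs):
    """Sum of per-student cross-entropy errors (1 = pass, 0 = fail)."""
    return sum(-(t * math.log(p) + (1 - t) * math.log(1 - p))
               for t, p in zip(labels, probs))

# Assumed ground truth for four students: two pass, two fail.
labels = [1, 1, 0, 0]

# Assumed linear scores (w*x + b) produced by each model.
scores_a = [2.0, 1.0, -1.0, -2.0]   # model A puts every student on the correct side
scores_b = [0.5, -0.5, 0.5, -0.5]   # model B puts two students on the wrong side

probs_a = [sigmoid(s) for s in scores_a]
probs_b = [sigmoid(s) for s in scores_b]

loss_a = binary_cross_entropy(labels, probs_a)
loss_b = binary_cross_entropy(labels, probs_b)
print("model A:", loss_a)
print("model B:", loss_b)
```

Under these assumed scores, model A ends up with the lower cross-entropy, which matches the intuition that the better model assigns higher probability to the correct outcome for each student.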

To solve the error, we move the line to ensure all the positive and negative predictions are in the right area.

In most real-life machine learning applications, we rarely make such a drastic move of the prediction line as we did above. We apply small steps to minimize the error. If we move in small steps in the above example, we might end up with the same error, which is the case with discrete error functions. However, in Illustration 1, since the mountain slope varies, we can detect small variations in our height (error) and take the necessary step, which is the case with continuous error functions.

For example, in Illustration 2, the model prediction output determines if a student will pass or fail; the model answers the question, “Will student A pass the SAT exams?” A continuous question would be, “How likely is student A to pass the SAT exams?” The answer to this could be 30%, 70%, and so on.

To convert the error function from a discrete to a continuous error function, we need to apply an activation function to each student’s linear score value.

How do we ensure that our model prediction output is continuous and in the range (0, 1)? We apply an activation function to each student’s linear scores. Our example is what we call binary classification, where you have two classes, either pass or fail. In this case, the activation function applied is referred to as the sigmoid activation function. The sigmoid uses the exponential function to map each linear score to a value between 0 and 1.

By doing the above, the error stops being a count of the two students who failed the SAT exams and becomes a summation of each student’s error. Using probabilities for Illustration 2 will make it easier to sum the error (how far they are from passing) of each student, making it easier to move the prediction line in small steps until we get a minimum summation error.

The goal is to predict the target class $t$ from an input $z$. The probability $P(t=1 | z)$ that input $z$ is classified as class $t=1$ is represented by the output $y$ of the logistic function computed as $y = \sigma(z)$. This logistic function maps the input $z$ to an output between $0$ and $1$.

We can write the probabilities that the class is $t=1$ or $t=0$ given input $z$ as:

$$P(t=1 | z) = \sigma(z) = \frac{1}{1+e^{-z}}$$

$$P(t=0 | z) = 1 - \sigma(z) = \frac{e^{-z}}{1+e^{-z}}$$

The cross-entropy loss is $-\sum_{i=1}^{n} \left[ t_i \log(y_i) + (1-t_i) \log(1-y_i) \right]$ if we sum over all $n$ samples.

Another reason to use the cross-entropy function is that in simple logistic regression this results in a convex loss function, of which the global minimum will be easy to find. Note that this is not necessarily the case anymore in multilayer neural networks.
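To make the difference between a discrete and a continuous error function concrete, here is a minimal Python sketch. It assumes a one-feature model $z = wx + b$ with invented data; the names `logistic`, `misclassified`, and `cross_entropy` are hypothetical and chosen only for this example.

```python
import math

def logistic(z):
    """Logistic (sigmoid) function: maps z to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def misclassified(targets, zs):
    """Discrete error: number of samples on the wrong side of the boundary z = 0."""
    return sum(1 for t, z in zip(targets, zs) if (z >= 0) != (t == 1))

def cross_entropy(targets, zs):
    """Continuous error: cross-entropy summed over all samples."""
    loss = 0.0
    for t, z in zip(targets, zs):
        y = logistic(z)
        loss -= t * math.log(y) + (1 - t) * math.log(1 - y)
    return loss

# Assumed data: four students, target 1 = pass, 0 = fail, one feature each.
targets = [1, 1, 0, 0]
xs = [3.0, -0.5, 1.0, -2.0]
w = 1.0

# Nudge the prediction line by a small step in the bias b.
for b in (0.0, 0.1):
    zs = [w * x + b for x in xs]
    print(b, misclassified(targets, zs), cross_entropy(targets, zs))
```

With these assumed values, the small step in `b` leaves the number of misclassified students unchanged, while the cross-entropy does change. That difference is what lets small steps be guided by a continuous error.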
